When you execute a mysqldump of a single database, all tables are dumped in alphabetical order.

Naturally, the reload of the mysqldump into a database would also be in alphabetical order.

You could just do a SHOW PROCESSLIST; and find out the DB Connection running the mysqldump. When the dump is reloaded, the DB Connection will vanish.

If you want to know what tables are in the dumpfile, run this against foobar.sql

cat foobar.sql | grep "^CREATE TABLE" | awk '{print $3}'

UPDATE 2012-05-02 13:53 EDT

Sorry for not noticing that there is only one table.

If the table is MyISAM, the only way to monitor is from the OS point of view. The reason? The table is write-locked throughout the reload. What do you look for? The size of the .MYD and .MYI files. Of course, you need to compare that with what the table size was before on the other DB server you imported from.

If the table is InnoDB and you have innodb_file_per_table enabled, the only way to monitor is from the OS point of view. The reason? The table is write-locked throughout the reload. What do you look for? The size of the .ibd file. Of course, you need to compare that with what the table size was before on the other DB server you imported from.

If the table is InnoDB and you have innodb_file_per_table disabled, not even the OS point of view can help.

UPDATE 2012-05-02 13:56 EDT

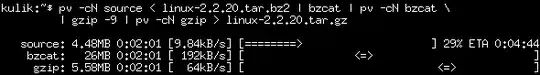

I addressed something like this last year : How do I get % progress for "type db.sql | mysql"

UPDATE 2012-05-02 14:09 EDT

Since a standard mysqldump write-locks the table like this:

LOCK TABLES `a` WRITE;

/*!40000 ALTER TABLE `a` DISABLE KEYS */;

INSERT INTO `a` VALUES (123),(451),(199),(0),(23);

/*!40000 ALTER TABLE `a` ENABLE KEYS */;

UNLOCK TABLES;

then, there is no way to get a progress from with mysql until the table lock is released.

If you can get LOCK TABLES and UNLOCK TABLES commented out of the dumpfile...

- if the table is MyISAM, SELECT COUNT(*) would work

- if the table is InnoDB, SELECT COUNT(*) would probably slow down/halt the load until count is done