So, it recently dawned on me that since I have three GPS clocks on my network, I could give back a little and serve time to the rest of the world. So far I haven't seen any downsides to this idea, but I have the following questions:

Can I virtualize this? I'm not going to spend money and time on standing up hardware for this, so virtualization is a must. Since the servers will have access to three stratum 1 sources, I can't see how this can be a problem, provided the ntpd config is correct.

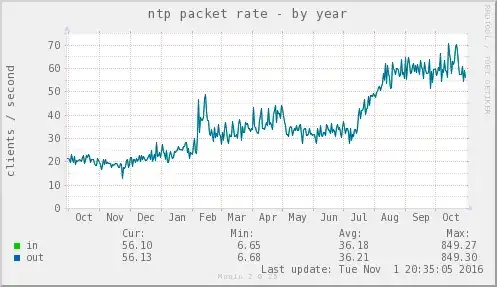

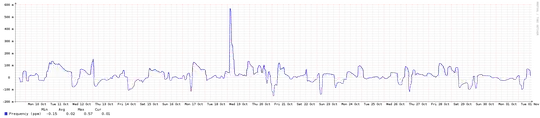

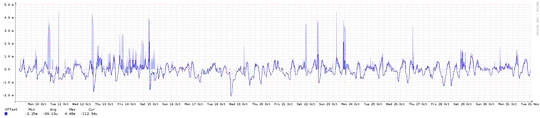

What kind of traffic does a public NTP server (part of pool.ntp.org) normally see? And how big do the VMs need to be? ntpd shouldn't be too resource-intensive as far as I can gather, but I'd rather know beforehand.

What security aspects are there to this? I'm thinking of just installing ntpd on two VMs in the DMZ, allowing only NTP (UDP port 123) in through the firewall, and only NTP out from the DMZ to the internal NTP servers. There also seem to be some ntpd settings recommended on the NTP pool page, but are they sufficient? https://www.ntppool.org/join/configuration.html
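
For reference, this is roughly what I have in mind for each DMZ VM. The hostnames are placeholders I made up, and the `restrict` lines are my reading of the pool recommendations, so treat it as a sketch rather than a tested config:

```
# /etc/ntp.conf sketch for a DMZ pool server (hostnames are hypothetical)

# Internal GPS-backed stratum 1 servers, reached through the firewall
server gps1.internal.example iburst
server gps2.internal.example iburst
server gps3.internal.example iburst

# Serve time to anyone, but refuse config changes, traps,
# unsolicited peering and ntpq/ntpdc status queries
restrict default kod nomodify notrap nopeer noquery
restrict -6 default kod nomodify notrap nopeer noquery

# Keep full access from localhost for local monitoring
restrict 127.0.0.1
restrict ::1
```

As far as I understand it, `noquery` only blocks the management queries and not ordinary time requests, so public clients should still be served.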

They recommend not having the LOCAL clock driver configured. Is this equivalent to removing the LOCAL time source configuration from the config files?
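
To be concrete, these are the lines I assume they mean. The `127.127.1.0` address is the LOCAL refclock; the stratum value is just what I have seen in stock configs and may differ in yours:

```
# LOCAL clock driver: falls back to the machine's own undisciplined clock
server 127.127.1.0
fudge  127.127.1.0 stratum 10
```

My assumption is that deleting or commenting out both lines is all that is needed.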

Anything else to consider?