I am trying to understand the advantages of multiprocessing over threading. I know that multiprocessing gets around the Global Interpreter Lock, but what other advantages are there, and can threading not do the same thing?

-

I think this can be useful in general: http://blogs.datalogics.com/2013/09/25/threads-vs-processes-for-program-parallelization/ Though there can be interesting differences depending on the language. E.g. according to Andrew Sledge's link, Python threads are slower. In Java things are quite the opposite: Java processes are much slower than threads, because you need a new JVM to start a new process. – inf3rno Feb 03 '16 at 09:17

-

Neither of the top two answers ([current top](http://stackoverflow.com/a/3044626/52074), [second answer](http://stackoverflow.com/a/3046201/52074)) covers the GIL in any significant way. Here is an answer that does cover the GIL aspect: http://stackoverflow.com/a/18114882/52074 – Trevor Boyd Smith Oct 19 '16 at 15:35

-

@AndrasDeak can we close the other way around as per: https://meta.stackoverflow.com/questions/251938/should-i-flag-a-question-as-duplicate-if-it-has-received-better-answers since this has much more upvotes/answers? – Ciro Santilli OurBigBook.com Feb 28 '20 at 11:51

-

@CiroSantilli the reason I chose this direction is because the answers on this question are terrible. The accepted answer has little substance, in the context of python it's unacceptable. The top-voted answer is better, but still lacks proper explanation. The dupe's accepted answer has a detailed explanation from one of the best contributors (and teachers) in the tag, actually explaining what the "GIL limitations" are and why you'd want to use either. I'd much prefer to keep the dupe in this direction. I think we discussed this in python chat, but I can ask for opinions there if you'd like. – Andras Deak -- Слава Україні Feb 28 '20 at 12:01

-

Especially since unregistered users are redirected from the dupe source to the target, I'd hate for them to find this post rather than abarnert's answer. – Andras Deak -- Слава Україні Feb 28 '20 at 12:02

-

@AndrasDeak OK, I'll copy my answer there then, since it's the best XD – Ciro Santilli OurBigBook.com Feb 28 '20 at 12:03

-

@CiroSantilli ah, I missed that you had an answer here! When I said "the answers [...] are terrible" I of course excluded present company ;) I think it'd be a lot better to have your answer there! – Andras Deak -- Слава Україні Feb 28 '20 at 12:05

12 Answers

Here are some pros/cons I came up with.

Multiprocessing

Pros

- Separate memory space

- Code is usually straightforward

- Takes advantage of multiple CPUs & cores

- Avoids GIL limitations for cPython

- Eliminates most needs for synchronization primitives unless you use shared memory (instead, it's more of a communication model for IPC)

- Child processes are interruptible/killable

- Python `multiprocessing` module includes useful abstractions with an interface much like `threading.Thread`

- A must with cPython for CPU-bound processing

Cons

- IPC a little more complicated with more overhead (communication model vs. shared memory/objects)

- Larger memory footprint

Threading

Pros

- Lightweight - low memory footprint

- Shared memory - makes access to state from another context easier

- Allows you to easily make responsive UIs

- cPython C extension modules that properly release the GIL will run in parallel

- Great option for I/O-bound applications

Cons

- cPython - subject to the GIL

- Not interruptible/killable

- If not following a command queue/message pump model (using the `Queue` module, named `queue` in Python 3), then manual use of synchronization primitives becomes a necessity (decisions are needed for the granularity of locking)

- Code is usually harder to understand and to get right - the potential for race conditions increases dramatically
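As a rough illustration of the command queue model mentioned in the threading cons, the sketch below has worker threads pull jobs from a `queue.Queue` and push results onto a second queue, so the user code needs no explicit locks. The `worker` function, the squaring job, and the `None` sentinel are assumptions made for this example; they are not part of the original answer.

```python
import queue
import threading

def worker(jobs, results):
    """Consume jobs until a None sentinel arrives (hypothetical job: squaring)."""
    while True:
        item = jobs.get()
        if item is None:  # sentinel: no more work for this worker
            break
        results.put(item * item)

jobs = queue.Queue()
results = queue.Queue()
threads = [threading.Thread(target=worker, args=(jobs, results)) for _ in range(4)]
for t in threads:
    t.start()

for n in range(10):
    jobs.put(n)
for _ in threads:
    jobs.put(None)  # one sentinel per worker
for t in threads:
    t.join()

# Completion order is not deterministic, so sort before printing.
squares = sorted(results.get() for _ in range(10))
print(squares)
```

The queues do the synchronization internally, which is the main reason this model tends to be easier to get right than hand-placed locks.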


-

It might be possible for multiprocessing to [yield a smaller memory footprint](http://stackoverflow.com/a/1316799/1658908) in the case of free lists. – Noob Saibot Jun 28 '14 at 17:12

-

For multiprocess: "Takes advantage of multiple CPUs & cores". Does threading have this pro too? – Deqing Aug 21 '14 at 13:33

-

@Deqing no, it does not. In Python, because of the GIL (Global Interpreter Lock), a single Python process cannot run threads in parallel (utilize multiple cores). It can however run them concurrently (context switch during I/O bound operations). – Andrew Guenther Sep 05 '14 at 04:56

-

@AndrewGuenther straight from the multiprocessing docs (emphasis mine): "The multiprocessing package offers both local and remote concurrency, **effectively side-stepping the Global Interpreter Lock** by using subprocesses instead of threads. Due to this, the multiprocessing module allows the programmer to fully leverage **multiple processors** on a given machine." – camconn Dec 13 '14 at 02:19

-

@camconn "@AndrewGuenther straight from the **multiprocessing** docs" Yes, the **multiprocessing** package can do this, but not the **multithreading** package, which is what my comment was referring to. – Andrew Guenther Dec 14 '14 at 20:46

-

Can you give an example of "cPython C extension modules that properly release the GIL will run in parallel"? – stenci Dec 18 '15 at 15:27

-

A multiprocessing con: the `logging` library does not work well with it. – Marco Sulla May 23 '16 at 12:32

-

@stenci psycopg2 (https://pypi.python.org/pypi/psycopg2) releases the GIL while waiting for response from postgres; and pretty much any C wrapper which uses the CFFI bridge will release the GIL on every C call (per http://cffi.readthedocs.io/en/latest/ref.html?highlight=GIL) -- this includes things like the bcrypt & argon2_cffi password hash libraries. – Eli Collins Jun 21 '16 at 21:56

-

Do CPython extensions make threads run in parallel? Please correct me if I'm wrong, my understanding is that threads enable concurrency whereas processes enable parallelism. (http://stackoverflow.com/questions/1050222/concurrency-vs-parallelism-what-is-the-difference) – opensourcegeek Jul 19 '16 at 09:16

-

@camconn I think you want to say "Mea culpa", which would be "My fault". "Mea copa" is like a mix of Latin and Spanish, saying "My cup". – llevar Jan 16 '19 at 09:30

-

Depending on your equipment and use case, performance is likely to be similar. I did a performance test between a thread pool and a process pool: they each had to OCR 600 images with pytesseract, and they both finished in 70 seconds. This was IO in the sense that you send the work away and wait for its response, and it was CPU intensive in that OCR eats a lot of cycles. – hamsolo474 - Reinstate Monica Apr 08 '20 at 02:01

The threading module uses threads, the multiprocessing module uses processes. The difference is that threads run in the same memory space, while processes have separate memory. This makes it a bit harder to share objects between processes with multiprocessing. Since threads use the same memory, precautions have to be taken or two threads will write to the same memory at the same time. This is what the global interpreter lock is for.

Spawning processes is a bit slower than spawning threads.
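The precautions mentioned above usually mean putting a lock around shared state. Here is a minimal sketch, assuming a hypothetical shared `counter` that several threads increment. An update like `counter += 1` is a read-modify-write and is not guaranteed to be atomic, so the example guards it with a `threading.Lock`.

```python
import threading

counter = 0
counter_lock = threading.Lock()

def add_many(n):
    """Increment the shared counter n times, holding the lock for each update."""
    global counter
    for _ in range(n):
        with counter_lock:  # without this, concurrent updates may be lost
            counter += 1

threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000
```

With `multiprocessing`, each process would get its own copy of `counter`, so the same code would need explicit sharing (for example a queue) instead of a lock around a global.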

-

The GIL in cPython *does not* protect your program state. It protects the interpreter's state. – Jeremy Brown Jun 15 '10 at 14:19

-

Also, the OS handles process scheduling. The threading library handles thread scheduling. And, threads share I/O scheduling -- which can be a bottleneck. Processes have independent I/O scheduling. – S.Lott Jun 15 '10 at 14:36

-

How about the IPC performance of multiprocessing? For a program which requires frequent sharing of objects among processes (e.g., through multiprocessing.Queue), what's the performance comparison to the in-process queue? – KFL Oct 21 '12 at 23:48

-

There is actually a good deal of difference: http://eli.thegreenplace.net/2012/01/16/python-parallelizing-cpu-bound-tasks-with-multiprocessing/ – Andrew Sledge May 29 '13 at 11:36

-

Is there a problem, though, if too many processes are spawned too often, since the machine might run out of processes/memory? It can be the same in the case of too many threads spawned too often, but still with less overhead than multiple processes. Right? – TommyT Feb 23 '15 at 20:13

-

"Once they are running, there is not much difference." - Are you sure of that? Since they use the same memory space communication between threads can be much faster than communication between processes... – inf3rno Feb 03 '16 at 08:54

-

this answer ignores the GIL and therefore is not sufficient. here is an answer that covers the GIL aspect very well IMO: http://stackoverflow.com/a/18114882/52074 – Trevor Boyd Smith Oct 19 '16 at 15:34

-

2@S.Lott Are you sure about 'threading library in Python handles thread scheduling?'. [David Beazley](http://www.dabeaz.com/python/GIL.pdf) says "Python does not have a thread scheduler • There is no notion of thread priorities, preemption, round-robin scheduling, etc. • All thread scheduling is left to the host operating system (e.g., Linux, Windows, etc.)" Now i am confused which one is correct? – Sadanand Upase Oct 21 '17 at 18:14

-

It's a bit unrelated but I want to run a function in the background but I have some resource limitations and cannot run the function as many times that I want and want to queue the extra executions of the function. Do you have any idea on how I should do that? I have my question [here](https://stackoverflow.com/questions/49081260/executing-a-function-in-the-background-while-using-limited-number-of-cores-threa). Could you please take a look at my question and see if you can give me some hints (or even better, an answer) on how I should do that? – Amir Mar 03 '18 at 19:09

-

There's a lot of problems with this answer, but what sticks out to me is `"Spawning processes is a bit slower than spawning threads. Once they are running, there is not much difference."` The truth is threads are a little anemic in python as compared to other languages due to the `Global Interpreter Lock`. Because of that lock, even though you've got multiple threads from the OS, everything will still process synchronously with multi-threading. If you're instituting parallelism correctly, you should see significant gains using multiprocessing. – Jamie Marshall Feb 27 '19 at 23:32

-

When using threading, precautions need to be taken only when thread works with a global variable. And not when it works with thread local or variable declared inside the thread function. Right? – variable Nov 06 '19 at 07:39

-

It's hard to communicate between different processes, in a local scope, and at the same time it's hard to communicate between threads, in a distributed scope. – Nov 16 '19 at 01:33

Threading's job is to enable applications to be responsive. Suppose you have a database connection and you need to respond to user input. Without threading, if the database connection is busy the application will not be able to respond to the user. By splitting off the database connection into a separate thread you can make the application more responsive. Also because both threads are in the same process, they can access the same data structures - good performance, plus a flexible software design.

Note that due to the GIL the app isn't actually doing two things at once, but what we've done is put the resource lock on the database into a separate thread so that CPU time can be switched between it and the user interaction. CPU time gets rationed out between the threads.

Multiprocessing is for times when you really do want more than one thing to be done at any given time. Suppose your application needs to connect to 6 databases and perform a complex matrix transformation on each dataset. Putting each job in a separate thread might help a little because when one connection is idle another one could get some CPU time, but the processing would not be done in parallel because the GIL means that you're only ever using the resources of one CPU. By putting each job in a Multiprocessing process, each can run on its own CPU and run at full efficiency.
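The responsiveness argument above can be sketched in a few lines. This is only an illustration under assumptions: `slow_query` is a hypothetical stand-in for a blocking database call (simulated with `time.sleep`), and the `while` loop stands in for whatever handles user input.

```python
import threading
import time

def slow_query(result):
    """Stand-in for a blocking database call (hypothetical)."""
    time.sleep(0.3)  # sleeping releases the GIL, like real blocking I/O
    result["rows"] = 42

result = {}
db_thread = threading.Thread(target=slow_query, args=(result,))
db_thread.start()

ticks = 0
while db_thread.is_alive():
    ticks += 1        # the main thread stays free to handle user input here
    time.sleep(0.05)
db_thread.join()

print(f"handled {ticks} UI ticks while waiting; rows={result['rows']}")
```

Both threads read and write the same `result` dictionary directly, which is the shared-data convenience described above. For the six-database matrix example, the per-dataset computation would instead go into separate processes.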


-

"but the processing would not be done in parallel because the GIL means that you're only ever using the resources of one CPU" GIL in multiprocessing how come .... ? – Nishant Kashyap Oct 12 '14 at 20:33

-

@NishantKashyap - Reread the sentence you took that quote from. Simon is talking about the processing of multiple threads - it's not about multiprocessing. – ArtOfWarfare Jun 24 '15 at 21:19

-

On memory differences these are in a capEx up-front cost sense. OpEx (running) threads can be just as hungry as processes. You have control of both. Treat them as costs. – MrMesees Sep 07 '17 at 12:24

-

@ArtOfWarfare can you explain why the accepted answer assumes multithreaded parallelism can be achieved if the GIL 'releases properly'? – Loveen Dyall Jun 21 '19 at 17:21

-

@LoveenDyall - I'm not sure why you called me of all people out and are commenting on this answer instead of the one you're asking about, but that bullet point is talking about writing a Python extension in C. If you're dropping out of the Python Interpreter and into the land of native code, you can absolutely utilize multiple CPU cores without concern for the Global Interpreter Lock, because it is only going to lock the interpreter, not native code. Beyond that, I'm not sure what exactly they mean by releasing the GIL properly - I've never written Python extensions before. – ArtOfWarfare Jun 21 '19 at 18:01

Python documentation quotes

The canonical version of this answer is now at the dupliquee question: What are the differences between the threading and multiprocessing modules?

I've highlighted the key Python documentation quotes about Process vs Threads and the GIL at: What is the global interpreter lock (GIL) in CPython?

Process vs thread experiments

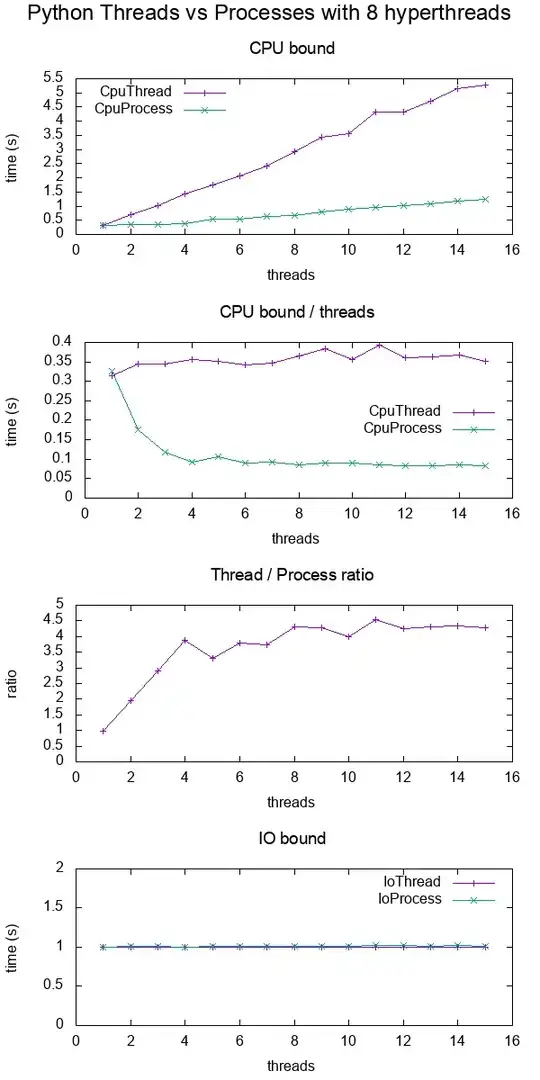

I did a bit of benchmarking in order to show the difference more concretely.

In the benchmark, I timed CPU and IO bound work for various numbers of threads on an 8 hyperthread CPU. The work supplied per thread is always the same, such that more threads means more total work supplied.

The results were:

Conclusions:

- for CPU bound work, multiprocessing is always faster, presumably due to the GIL

- for IO bound work, both are exactly the same speed

- threads only scale up to about 4x instead of the expected 8x since I'm on an 8 hyperthread machine.

  Contrast that with a C POSIX CPU-bound work which reaches the expected 8x speedup: What do 'real', 'user' and 'sys' mean in the output of time(1)?

  TODO: I don't know the reason for this, there must be other Python inefficiencies coming into play.

Test code:

    #!/usr/bin/env python3

    import multiprocessing
    import threading
    import time
    import sys

    def cpu_func(result, niters):
        '''
        A useless CPU bound function.
        '''
        for i in range(niters):
            result = (result * result * i + 2 * result * i * i + 3) % 10000000
        return result

    class CpuThread(threading.Thread):
        def __init__(self, niters):
            super().__init__()
            self.niters = niters
            self.result = 1
        def run(self):
            self.result = cpu_func(self.result, self.niters)

    class CpuProcess(multiprocessing.Process):
        def __init__(self, niters):
            super().__init__()
            self.niters = niters
            self.result = 1
        def run(self):
            self.result = cpu_func(self.result, self.niters)

    class IoThread(threading.Thread):
        def __init__(self, sleep):
            super().__init__()
            self.sleep = sleep
            self.result = self.sleep
        def run(self):
            time.sleep(self.sleep)

    class IoProcess(multiprocessing.Process):
        def __init__(self, sleep):
            super().__init__()
            self.sleep = sleep
            self.result = self.sleep
        def run(self):
            time.sleep(self.sleep)

    if __name__ == '__main__':
        cpu_n_iters = int(sys.argv[1])
        sleep = 1
        cpu_count = multiprocessing.cpu_count()
        input_params = [
            (CpuThread, cpu_n_iters),
            (CpuProcess, cpu_n_iters),
            (IoThread, sleep),
            (IoProcess, sleep),
        ]
        header = ['nthreads']
        for thread_class, _ in input_params:
            header.append(thread_class.__name__)
        print(' '.join(header))
        for nthreads in range(1, 2 * cpu_count):
            results = [nthreads]
            for thread_class, work_size in input_params:
                start_time = time.time()
                threads = []
                for i in range(nthreads):
                    thread = thread_class(work_size)
                    threads.append(thread)
                    thread.start()
                for i, thread in enumerate(threads):
                    thread.join()
                results.append(time.time() - start_time)
            print(' '.join('{:.6e}'.format(result) for result in results))

GitHub upstream + plotting code on same directory.

Tested on Ubuntu 18.10, Python 3.6.7, in a Lenovo ThinkPad P51 laptop with CPU: Intel Core i7-7820HQ CPU (4 cores / 8 threads), RAM: 2x Samsung M471A2K43BB1-CRC (2x 16GiB), SSD: Samsung MZVLB512HAJQ-000L7 (3,000 MB/s).

Visualize which threads are running at a given time

This post https://rohanvarma.me/GIL/ taught me that you can run a callback whenever a thread is scheduled with the target= argument of threading.Thread and the same for multiprocessing.Process.

This allows us to view exactly which thread runs at each time. When this is done, we would see something like (I made this particular graph up):

                +--------------------------------------+
                + Active threads / processes           +
    +-----------+--------------------------------------+
    |Thread   1 |********     ************             |
    |         2 |        *****            *************|
    +-----------+--------------------------------------+
    |Process  1 |***  ************** ******  ****      |
    |         2 |** **** ****** ** ********* **********|
    +-----------+--------------------------------------+
                + Time -->                             +
                +--------------------------------------+

which would show that:

- threads are fully serialized by the GIL

- processes can run in parallel
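One rough way to collect data for a timeline like the one above is to have each worker log timestamps itself. The sketch below is an assumption-laden illustration, not the method from the linked post: `busy_worker`, the chunk size, and the log format are all hypothetical. The recorded intervals are approximate, since a thread can be switched out in the middle of a chunk.

```python
import threading
import time

def busy_worker(name, chunks, log):
    """Do CPU-bound work in chunks and log (name, start, end) for each chunk."""
    for _ in range(chunks):
        start = time.perf_counter()
        sum(i * i for i in range(50_000))  # pure-Python work, holds the GIL
        # list.append is thread-safe in CPython, so no extra lock is used here
        log.append((name, start, time.perf_counter()))

log = []
threads = [
    threading.Thread(target=busy_worker, args=(f"thread-{k}", 5, log))
    for k in range(2)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Print the chunks in start order; overlapping intervals hint at interleaving.
for name, start, end in sorted(log, key=lambda rec: rec[1]):
    print(f"{name}: {start:.6f} -> {end:.6f}")
```

The same worker could be handed to `multiprocessing.Process` instead, but the log would then have to travel back through something like a `multiprocessing.Queue`, because a plain list is not shared between processes.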


-

Re: "threads only scale up to about 4x instead of the expected 8x since I'm on an 8 hyperthread machine." For CPU bound tasks, it should be expected that a 4 core machine maxes out at 4x. Hyper-threading only helps CPU context switching. (In most cases it is only the "hype" that is effective. /joke) – Blaine Sep 16 '19 at 18:14

-

SO doesn't like dupe answers though, so you should probably consider deleting this instance of the answer. – Andras Deak -- Слава Україні Feb 28 '20 at 12:09

-

@AndrasDeak I will leave it here because this page will be less good otherwise and certain links would break and I would lose hard earned rep. – Ciro Santilli OurBigBook.com Feb 28 '20 at 12:22

The key advantage is isolation. A crashing process won't bring down other processes, whereas a crashing thread will probably wreak havoc with other threads.
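A small sketch of that isolation, under stated assumptions: the child here is started with `subprocess` rather than `multiprocessing` purely to keep the example short, and `os.abort()` is used as a stand-in for a crash in native code. The parent only observes a non-zero exit status and keeps running.

```python
import subprocess
import sys

# The child aborts abruptly, roughly what a crash in native code looks like.
crashing_code = "import os; os.abort()"
child = subprocess.run(
    [sys.executable, "-c", crashing_code],
    stderr=subprocess.DEVNULL,  # hide any abort message from the child
)

survived = True  # reached only because the parent process kept running
print("child exit status:", child.returncode)
print("parent still running:", survived)
```

With `multiprocessing.Process`, the equivalent information is available from the `exitcode` attribute after `join()`. A thread that corrupted memory the same way would take the whole process down with it.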


-

Pretty sure this is just wrong. If a standard thread in Python ends by raising an exception, nothing will happen when you join it. I wrote my own subclass of thread which catches the exception in a thread and re-raises it on the thread that joins it, because the fact that it was just ignored was really bad (it led to other hard-to-find bugs). A process would have the same behavior. Unless by crashing you meant Python actually crashing, not an exception being raised. If you ever find Python crashing, that is definitely a bug that you should report. Python should always raise exceptions and never crash. – ArtOfWarfare Oct 16 '15 at 19:07

-

@ArtOfWarfare Threads can do much more than raise an exception. A rogue thread can, via buggy native or ctypes code, trash memory structures anywhere in the process, including the python runtime itself, thus corrupting the entire process. – Marcelo Cantos Oct 17 '15 at 01:11

-

@jar from a generic point of view, Marcelo's answer is more complete. If the system is really critical, you should never rely on the fact that "things work as expected". With separate memory spaces, an overflow must happen in order to damage nearby processes, which is less likely to happen than the situation described by Marcelo. – DGoiko Jan 17 '19 at 00:41
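The `Thread` subclass described in the comments (catch the exception in the worker, re-raise it in the thread that joins) could look roughly like the sketch below. The class name `PropagatingThread` and the `fail` function are hypothetical; this is one possible shape, not the commenter's actual code.

```python
import threading

class PropagatingThread(threading.Thread):
    """Thread that re-raises an exception from run() in the thread calling join()."""

    exc = None  # set if the target raised

    def run(self):
        try:
            super().run()
        except BaseException as e:  # remember the failure instead of losing it
            self.exc = e

    def join(self, timeout=None):
        super().join(timeout)
        if self.exc is not None:
            raise self.exc

def fail():
    raise ValueError("boom")

t = PropagatingThread(target=fail)
t.start()
try:
    t.join()
    outcome = "no error seen"
except ValueError as e:
    outcome = f"caught in joining thread: {e}"
print(outcome)  # caught in joining thread: boom
```

With a plain `threading.Thread`, the traceback is only printed by the thread's exception hook and `join()` returns normally, which is the behavior the comment complains about.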

As mentioned in the question, Multiprocessing in Python is the only real way to achieve true parallelism. Multithreading cannot achieve this because the GIL prevents threads from running in parallel.

As a consequence, threading may not always be useful in Python, and in fact, may even result in worse performance depending on what you are trying to achieve. For example, if you are performing a CPU-bound task such as decompressing gzip files or 3D-rendering (anything CPU intensive) then threading may actually hinder your performance rather than help. In such a case, you would want to use Multiprocessing as only this method actually runs in parallel and will help distribute the weight of the task at hand. There could be some overhead to this since Multiprocessing involves copying the memory of a script into each subprocess which may cause issues for larger-sized applications.

However, Multithreading becomes useful when your task is IO-bound. For example, if most of your task involves waiting on API-calls, you would use Multithreading because why not start up another request in another thread while you wait, rather than have your CPU sit idly by.

TL;DR

- Multithreading is concurrent and is used for IO-bound tasks

- Multiprocessing achieves true parallelism and is used for CPU-bound tasks
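To make the IO-bound half of the TL;DR concrete, here is a minimal sketch in which `fake_api_call` (a hypothetical function) simulates a network request with `time.sleep`. Five such calls run in a thread pool and overlap their waiting time.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_api_call(i):
    """Stand-in for a network request; time.sleep releases the GIL while waiting."""
    time.sleep(0.2)
    return i

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as pool:
    responses = list(pool.map(fake_api_call, range(5)))
elapsed = time.perf_counter() - start

print(responses)
print(f"{elapsed:.2f}s elapsed, versus about 1.0s if run one after another")
```

Swapping `ThreadPoolExecutor` for `ProcessPoolExecutor` is the usual move when the function is CPU-bound instead of waiting on IO.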

-

@YellowPillow Let's say you're making multiple API calls to request some data, in this case the majority of the time is spent waiting on the network. As it awaits this network `I/O`, the `GIL` can be released to be used by the next task. However, the task will need to re-acquire the `GIL` in order to go to execute the rest of any python code associated with each API request, but, as the task is waiting for the network, it does not need to hold on to the `GIL`. – buydadip Oct 13 '18 at 20:26

Another thing not mentioned is that, where speed is concerned, it depends on what OS you are using. On Windows, processes are costly, so threads would be the better choice there. On Unix, processes are cheaper to create than on Windows, so using processes on Unix is much safer and still quick to spawn.


-

Do you have actual numbers to back this up with? IE, comparing doing a task serially, then on multiple threads, then on multiple processes, on both Windows and Unix? – ArtOfWarfare Jun 24 '15 at 21:21

-

Agree with @ArtOfWarfare's question. Numbers? Do you recommend using threads on Windows? – m3nda Oct 16 '15 at 11:31

-

The OS doesn't matter much because pythons GIL doesn't allow it to run multiple threads on a single process. Multiprocessing will be faster in Windows and Linux. – Viliami Mar 05 '19 at 23:04

Other answers have focused more on the multithreading vs multiprocessing aspect, but in Python the Global Interpreter Lock (GIL) has to be taken into account. When more threads (say k) are created, they will generally not increase performance by k times, because the program still runs much like a single-threaded application. The GIL is a global lock that allows only a single thread to execute Python code at a time, utilizing only a single core. Performance does improve where C extensions such as numpy, or network and I/O operations, are being used, because a lot of the work is done in the background with the GIL released.
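The "k threads do not give k times the speed" point can be checked with a short timing sketch. The `count_down` function and the loop size are assumptions chosen for illustration. On a standard CPython build with the GIL the two timings are usually close, but exact numbers vary by machine and interpreter version, so no expected output is shown.

```python
import threading
import time

def count_down(n):
    """Pure-Python CPU-bound loop; holds the GIL while it runs."""
    while n > 0:
        n -= 1

N = 2_000_000

# Run the work four times, one after another.
start = time.perf_counter()
for _ in range(4):
    count_down(N)
serial = time.perf_counter() - start

# Run the same four pieces of work in four threads.
start = time.perf_counter()
threads = [threading.Thread(target=count_down, args=(N,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
threaded = time.perf_counter() - start

print(f"serial: {serial:.2f}s, 4 threads: {threaded:.2f}s")
```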

So when threading is used, there is only a single operating system level thread while python creates pseudo-threads which are completely managed by threading itself but are essentially running as a single process. Preemption takes place between these pseudo threads. If the CPU runs at maximum capacity, you may want to switch to multiprocessing.

Now in case of self-contained instances of execution, you can instead opt for pool. But in case of overlapping data, where you may want processes communicating you should use multiprocessing.Process.


-

"So when threading is used, there is only a single operating system level thread while python creates pseudo-threads which are completely managed by threading itself but are essentially running as a single process. " That is not true. Python threads are _real_ OS-threads. What you are describing are [green threads](https://en.wikipedia.org/wiki/Green_threads), Python does not use that. It's just that a thread needs to hold the GIL to execute Python-bytecode which makes thread-execution sequential. – Darkonaut Feb 13 '19 at 16:09

-

_Now in case of self-contained instances of execution, you can instead opt for pool. But in case of overlapping data, where you may want processes communicating you should use multiprocessing.Process._ What pool? The multiprocessing library has a Pool, so this doesn't make much sense. – AMC Feb 09 '20 at 16:22

MULTIPROCESSING

- Multiprocessing adds CPUs to increase computing power.

- Multiple processes are executed concurrently.

- Creation of a process is time-consuming and resource intensive.

- Multiprocessing can be symmetric or asymmetric.

- The multiprocessing library in Python uses a separate memory space and multiple CPU cores, bypasses GIL limitations in CPython, has killable child processes (e.g. function calls in the program), and is much easier to use.

- Some caveats of the module are a larger memory footprint and IPC that is a little more complicated, with more overhead.

MULTITHREADING

- Multithreading creates multiple threads of a single process to increase computing power.

- Multiple threads of a single process are executed concurrently.

- Creation of a thread is economical in terms of both time and resources.

- The threading library is lightweight, shares memory, is useful for responsive UIs, and works well for I/O-bound applications.

- Threads aren't killable and are subject to the GIL.

- Multiple threads live in the same process in the same space; each thread does a specific task and has its own code, stack memory, and instruction pointer, while sharing heap memory.

- If a thread has a memory leak it can damage the other threads and parent process.

Example of Multi-threading and Multiprocessing using Python

Python 3 has a facility for launching parallel tasks, which makes our work easier.

The `concurrent.futures` module provides both thread pooling and process pooling.

The following gives an insight:

ThreadPoolExecutor Example

    import concurrent.futures
    import urllib.request

    URLS = ['http://www.foxnews.com/',
            'http://www.cnn.com/',
            'http://europe.wsj.com/',
            'http://www.bbc.co.uk/',
            'http://some-made-up-domain.com/']

    # Retrieve a single page and report the URL and contents
    def load_url(url, timeout):
        with urllib.request.urlopen(url, timeout=timeout) as conn:
            return conn.read()

    # We can use a with statement to ensure threads are cleaned up promptly
    with concurrent.futures.ThreadPoolExecutor(max_workers=5) as executor:
        # Start the load operations and mark each future with its URL
        future_to_url = {executor.submit(load_url, url, 60): url for url in URLS}
        for future in concurrent.futures.as_completed(future_to_url):
            url = future_to_url[future]
            try:
                data = future.result()
            except Exception as exc:
                print('%r generated an exception: %s' % (url, exc))
            else:
                print('%r page is %d bytes' % (url, len(data)))

ProcessPoolExecutor

    import concurrent.futures
    import math

    PRIMES = [
        112272535095293,
        112582705942171,
        112272535095293,
        115280095190773,
        115797848077099,
        1099726899285419]

    def is_prime(n):
        # Handle the small cases first so that 2 is not rejected as even
        if n < 2:
            return False
        if n == 2:
            return True
        if n % 2 == 0:
            return False

        # Trial division by odd numbers up to the square root
        sqrt_n = int(math.floor(math.sqrt(n)))
        for i in range(3, sqrt_n + 1, 2):
            if n % i == 0:
                return False
        return True

    def main():
        # Each number is checked in a separate worker process
        with concurrent.futures.ProcessPoolExecutor() as executor:
            for number, prime in zip(PRIMES, executor.map(is_prime, PRIMES)):
                print('%d is prime: %s' % (number, prime))

    if __name__ == '__main__':
        main()


Threads share the same memory space, so special precautions must be taken to guarantee that two threads don't modify the same memory location at the same time. The CPython interpreter handles this for its own internals using a mechanism called the GIL, or Global Interpreter Lock.

What is the GIL? (It has been covered above, but I want to clarify it.)

In CPython, the global interpreter lock, or GIL, is a mutex that protects access to Python objects, preventing multiple threads from executing Python bytecodes at once. This lock is necessary mainly because CPython's memory management is not thread-safe.

For the main question, we can compare the two by use case:

1. Use cases for threading: in GUI programs, threading can be used to make the application responsive. For example, in a text editing program, one thread can take care of recording the user inputs, another can be responsible for displaying the text, a third can do spell-checking, and so on. Here, the program has to wait for user interaction, which is the biggest bottleneck. Another use case for threading is programs that are IO bound or network bound, such as web scrapers.

2. Use cases for multiprocessing: multiprocessing outshines threading in cases where the program is CPU intensive and doesn't have to do any IO or user interaction.

For more details, visit this link and link. For in-depth knowledge of threading, visit here; for multiprocessing, visit here.


A process may have multiple threads. These threads are the units of execution within the process and may share its memory.

Processes run on the CPU, and threads reside under each process. Processes are individual entities which run independently. If you want to share data or state between processes, you may use an external store such as a cache (Redis, Memcached), files, or a database.
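For processes started with the `multiprocessing` module, the module's own `Queue` is another way to pass data, alongside the external stores listed above. The sketch below uses a hypothetical `state` dictionary and `child` function to show that a change made in the child is not visible in the parent unless it is sent back explicitly.

```python
import multiprocessing

state = {"value": 0}

def child(results):
    """Runs in a separate process with its own copy of `state`."""
    state["value"] = 99          # changes only the child's copy
    results.put(state["value"])  # send the value back explicitly

def main():
    results = multiprocessing.Queue()
    proc = multiprocessing.Process(target=child, args=(results,))
    proc.start()
    from_child = results.get()   # blocks until the child has sent its value
    proc.join()
    print("child saw:", from_child)        # 99
    print("parent sees:", state["value"])  # still 0

if __name__ == "__main__":
    main()
```

Had `child` been run with `threading.Thread` instead, the parent would see 99, because threads share the same `state` object.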


-

_Processes run on the CPU, so threads are residing under each process._ How does the first part of that sentence lead to the second part? Threads run on the CPU too. – AMC Feb 09 '20 at 16:24

As I learned in university, most of the answers above are right. In practice, on different platforms (always using Python), spawning multiple threads ends up like spawning one process. The difference is that with processes multiple cores share the load, instead of only 1 core processing everything at 100%. So if you spawn, for example, 10 threads on a 4-core PC, you will end up getting only 25% of the CPU's power! And if you spawn 10 processes, you will end up with the CPU processing at 100% (if you don't have other limitations). I'm not an expert in all the new technologies; I'm answering from my own real-world experience.


-

_In PRACTISE on different platforms (always using python) spawning multiple threads ends up like spawning one process._ They don't have the same use cases at all though, so I'm not sure I agree with that. – AMC Feb 09 '20 at 16:28